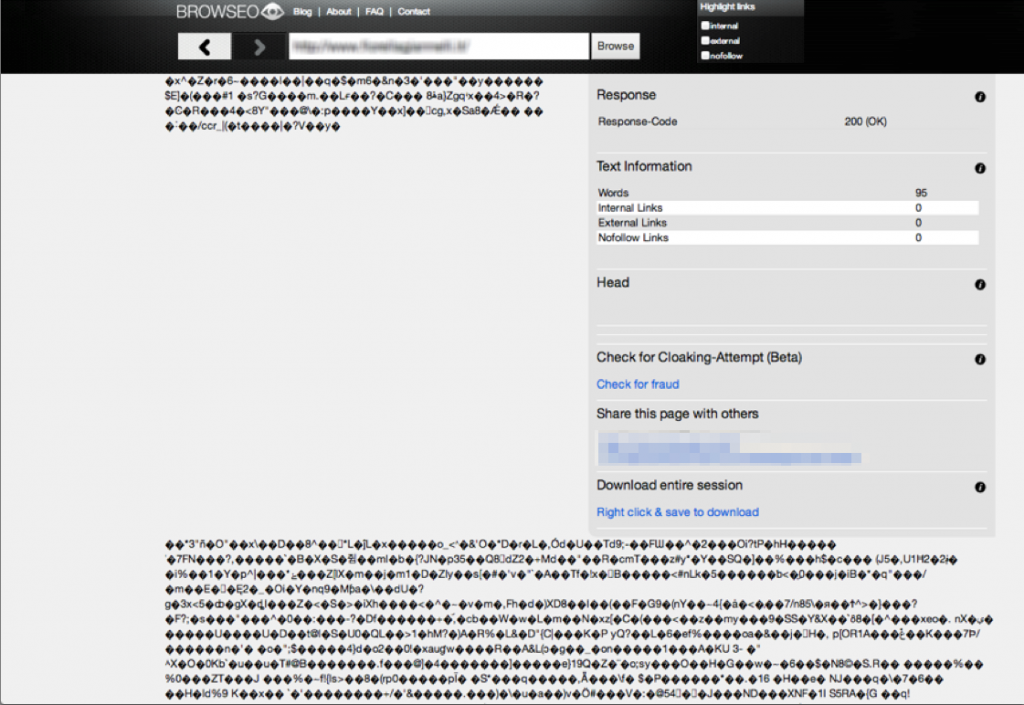

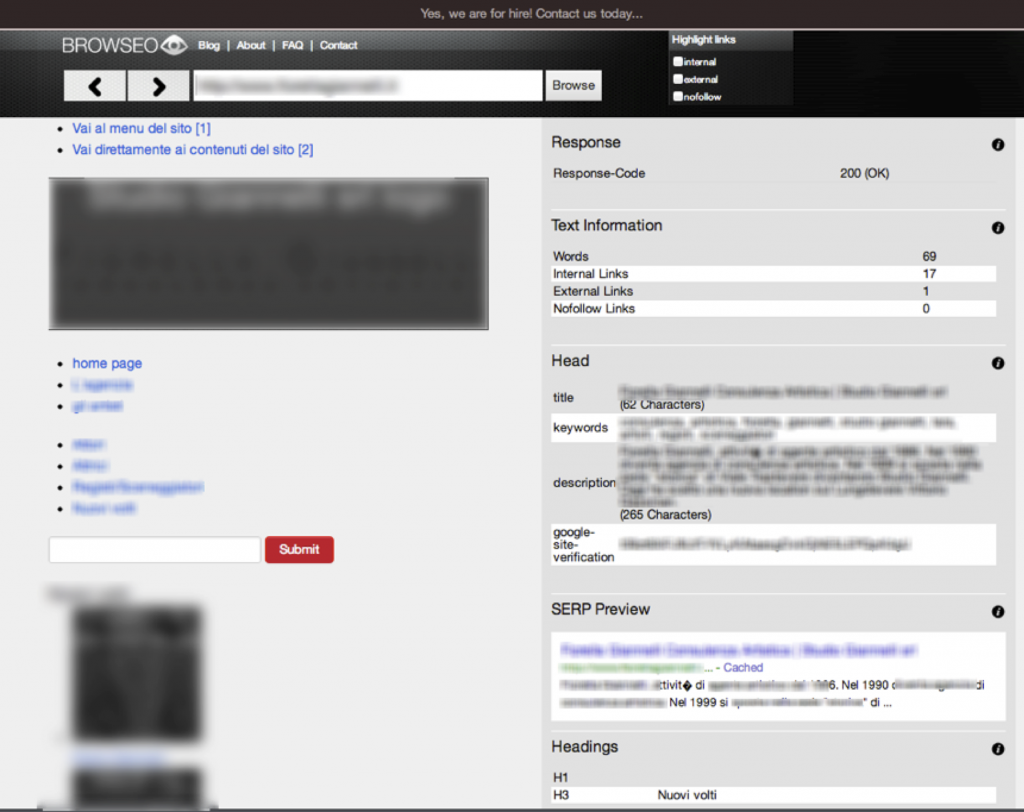

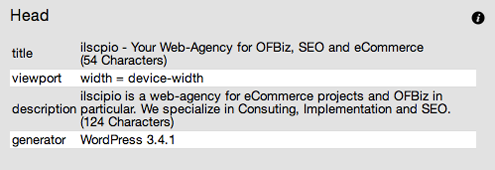

Given that this is a site with an awesome tool for viewing a website (as a search engine would), it seemed only fitting that we should take things a step further. Many in the search optimization world believe they 'get it', but one can't be too sure.

A lot of the time we hear about things like 'links', 'meta tags' and 'title' elements. But that's really a limited view of what search engines (like the almighty Googly) are doing when they assess your site. I thought it would be an interesting exercise to take a slightly deeper walk through the woods.

Walk with me....

Pages v Websites

One of the first things we need to understand is that there are indeed elements that differ between a page and the entire domain. I often get the sense that SEO folks don't always understand this. In fact, most of what a search engine does happens at the page level, not the domain level.

Outside of links, the only really important areas that tend to be site-wide are trust elements, classifications (topical etc), internal link ratios and geo-localization. By and large, a search engine actually sees your site on a page-by-page basis. This is the first important distinction to keep in mind.

Site-Level Signals

But what things does Google see on the site level?

Authority/Trust; this is the all-encompassing concept of not only what you do on your site (outbound links, web spam, sneaky redirects, thin content etc) but what is going on off-site (link spam, social spam etc). What level of trust does your website have in the eyes of the search engine? This is something that is incredibly hard to build, but easy to lose.

Thin Content (formerly known as Panda); while related to the above, it's worth having on its own. Large amounts of thin content and/or duplication could end up dampening sections or entire sites. We can also consider the GooPLA (Google Page Layout Algorithm) concepts here as well.

Classifications; while this does generally exist more on the page level, there are categorical elements for an entire website. These can also contain more granular elements, from an ecommerce site (or subdomain etc) to a given market. This is where strong architecture can become your best friend. Assist the search engine in understanding (and classifying) what your site and its various parts are about.

Internal link ratios; in simplest terms, you want to show a search engine the importance of pages through internal links: link to the most important pages the most and the least important the least. Getting this wrong can often lead to page mapping issues (the wrong target page ranking).
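To make the idea concrete, here is a minimal sketch of how you might audit your own internal link counts. It assumes you already have a crawl of your site as a mapping of page URL to the hrefs found on that page; the function name `internal_inlink_counts` and the `example.com` URLs are made up for illustration, and this says nothing about how a search engine actually weighs these links.

```python
from collections import Counter
from urllib.parse import urljoin, urlparse


def internal_inlink_counts(pages: dict[str, list[str]], site_host: str) -> Counter:
    """Count internal links pointing at each URL.

    `pages` maps a page URL to the raw href values found on that page
    (a hypothetical crawl result, not the output of any specific tool).
    """
    counts: Counter = Counter()
    for source, hrefs in pages.items():
        for href in hrefs:
            # Resolve relative hrefs against the page they appear on.
            parsed = urlparse(urljoin(source, href))
            if parsed.netloc != site_host:
                continue  # external link, not part of the internal ratio
            target = parsed._replace(fragment="").geturl()
            if target != source:  # ignore links from a page to itself
                counts[target] += 1
    return counts


if __name__ == "__main__":
    crawl = {
        "https://example.com/": ["/products", "/about", "https://other.com/x"],
        "https://example.com/about": ["/products", "/"],
        "https://example.com/products": ["/"],
    }
    for url, n in internal_inlink_counts(crawl, "example.com").most_common():
        print(n, url)
```

If a page you consider a money page sits near the bottom of that list, that is the kind of mismatch worth a closer look.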

Localization; another element that is more site-wide in nature is localization. Meaning, if applicable, where does this entity reside? What areas do they service? We can even consider geographic targets for sites not directly geo-location related. Elements here can include the top level domain, language etc.

Entities; an entity is a person, place or thing. One needs to look no further than Google's knowledge graph to see the importance they are seemingly placing on these over the years. Whether it is brands on the page (ecommerce) or citations in an informational piece, make them prominent. Also, Google seems bullish on authorship of late, so also consider the company entities (people) and how they can be leveraged. Your site can 'be' an entity as well as having sub-entities and associations throughout.

Domain history; Matt Cutts recently talked about how a domain that's REALLY had issues might even carry penalties after someone else buys it. Given that, we know that, to some extent, Google can potentially look at the domain history in classifying a website. This of course plays back into the above 'trust' elements.

Page Level Signals

As I mentioned earlier, Google (and most search engines) often look at things on a page-by-page level, not site-wide. This is an important distinction that SEO types often seem to forget. They crawl PAGES.

What elements might a search engine look at on the page level?

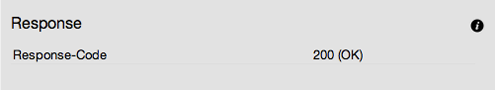

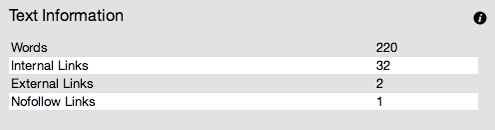

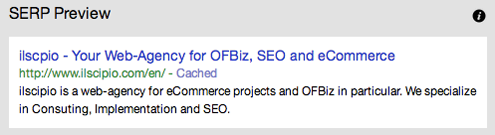

Meta-data; the easiest one here is of course the header data from TITLE to Meta-descriptions (not a ranking factor) and even canonical and other tags. Some of these elements can be ranking factors while others (rel=canonical for example) can tell Google how to treat the page.
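If you want to see those head elements the way a crawler would encounter them, a rough sketch using only Python's standard library is below. The class name `HeadSignals` and the sample markup are invented for this example; it simply pulls out the title, named meta tags and the canonical link, and makes no claim about how any search engine parses or scores them.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the title, named meta tags and rel=canonical from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}          # e.g. {"description": "...", "robots": "..."}
        self.canonical = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and a.get("name"):
            self.meta[a["name"].lower()] = a.get("content") or ""
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


if __name__ == "__main__":
    sample = (
        "<html><head><title>Blue Widgets | Example</title>"
        '<meta name="description" content="Buy blue widgets.">'
        '<link rel="canonical" href="https://example.com/widgets">'
        "</head><body></body></html>"
    )
    parser = HeadSignals()
    parser.feed(sample)
    print(parser.title, parser.meta, parser.canonical)
```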

Classifications (and Localization); much like the site-level elements, pages themselves will fall under classifications. It could be by content type (informational, transactional etc), by intent (commercial, knowledge), by localization (about a given region) etc. Ensure you communicate the intent and core elements of a given page.

Entities; Again, as with the site-level, entities can also be associated with a given page on the site. In fact, Google potentially now looks at associating query inference (medical symptoms) and an entity (the condition itself).

Authority/trust (external links); beyond the above-mentioned authorship elements, trust signals can also be seen in what you link to (as well as citations). It can be a positive or a negative. Take the opportunity where you can to associate the page with other authoritative web identities.

Temporal signals; on the page level, Google is potentially looking at elements such as document inception/age, freshness (QDF et al), niche trends, content update rates and more. They might even look at historic query and click data.

Semantic signals; web pages have words right? Search engines love words, yea? Then be sure that some form of semantic analysis is going on for the page including categorization of content, related term/phrase ratios, citations and more.

Linguistic indicators (language and nuances); as part of the above classification methods, the language used on the page can also help with closer demographic identification.

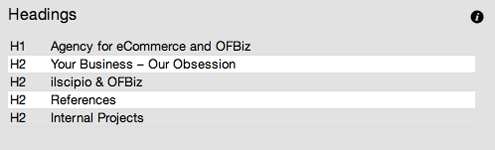

Prominence factors; another area that doesn't get a whole lot of attention, but is known to have shown up over the years in various patents, covers elements such as headings (h1-h6), bold, lists (and possibly italics). They likely don't weigh heavily, but are indeed worth consideration.
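A quick way to check what you are actually emphasising on a page is to pull out the text sitting inside those elements. The sketch below is one way to do that with the standard library; `ProminenceCollector` and the set of tags it watches are my own choices for illustration, it assumes reasonably well-formed markup (closing tags present), and it does not attempt to assign any weights.

```python
from collections import defaultdict
from html.parser import HTMLParser

# Tags treated as "prominent" for this sketch (an assumption, not a known list).
PROMINENT = {"h1", "h2", "h3", "h4", "h5", "h6", "b", "strong", "i", "em", "li"}


class ProminenceCollector(HTMLParser):
    """Group the text found inside headings, bold, italics and list items."""

    def __init__(self):
        super().__init__()
        self.found = defaultdict(list)  # tag -> list of text snippets
        self._open = []                 # stack of (tag, text parts) still open

    def handle_starttag(self, tag, attrs):
        if tag in PROMINENT:
            self._open.append((tag, []))

    def handle_data(self, data):
        # Text belongs to every prominent element currently open (nesting).
        for _, parts in self._open:
            parts.append(data)

    def handle_endtag(self, tag):
        if self._open and self._open[-1][0] == tag:
            _, parts = self._open.pop()
            text = " ".join("".join(parts).split())  # collapse whitespace
            if text:
                self.found[tag].append(text)


if __name__ == "__main__":
    page = (
        "<h1>Blue <b>Widgets</b></h1>"
        "<p>We sell <strong>cheap</strong> widgets.</p>"
        "<ul><li>Fast shipping</li></ul>"
    )
    collector = ProminenceCollector()
    collector.feed(page)
    for tag, snippets in collector.found.items():
        print(tag, snippets)
```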

Oh and I do not jest when I say there's more. We're sticking to some of the higher level parts to get the point across. We've likely already lost ½ the readers that started this piece. Thanks for sticking around.

The off-site stuff

While the focus of this offering was more about the on-site bits, Google also sees the off-site activities as part of its perception. From authority and topicality to demographics and categorizations, it all comes into play in how Google perceives you as an entity/set of entities.

These can include....

Link related factors;

- PageRank (or relative nodal link valuation)

- Link text (internal and external)

- Link relevance (global and page)

- Also; Temporal, Personalized PageRank and Semantic analysis
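For anyone who has never looked under the hood of the first item in that list, here is the textbook power-iteration form of PageRank on a toy link graph. Treat it as a sketch of the published idea only: the function name, the tiny graph and the conventional 0.85 damping value are assumptions for illustration, and it is certainly not what Google runs in production today.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Textbook PageRank by power iteration.

    `links` maps each page to the pages it links out to.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    for page, score in sorted(pagerank(graph).items(), key=lambda kv: -kv[1]):
        print(page, round(score, 4))
```

The point to take away is simply that a page's score depends on who links to it and how many other links those pages hand out, which is why the rest of the list (link text, relevance, temporal factors) gets layered on top.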

Temporal;

- Link velocity

- Link age

- Entity citation frequency

- social visibility

Authority/Trust;

- Citations

- Co-citations

- TrustRank type signals

Reach;

- Links

- News

- Social

- Video

You get the idea. I don't really want to focus on the off-site elements as much today. We're looking at these as they do fall into 'how Google sees your website'. It's just not the focus...

Moving along...

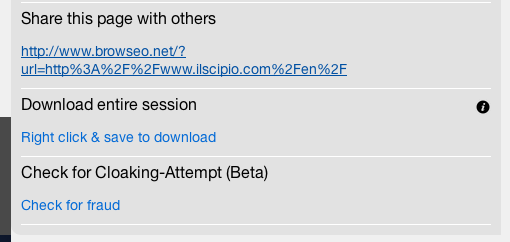

The Spam Connection

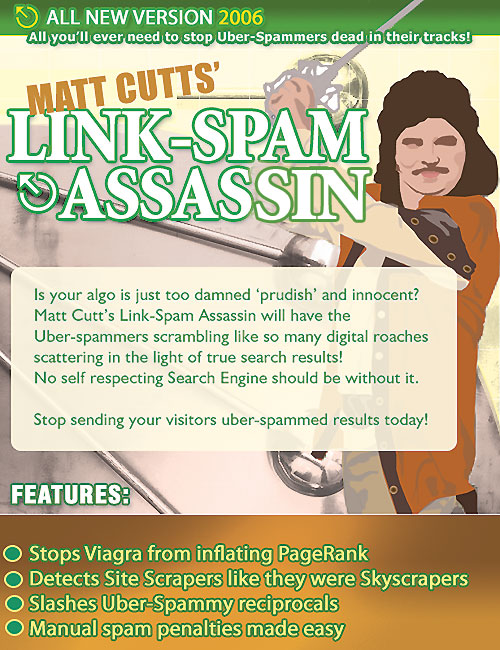

Another loosely related element of how Google perceives the site is, of course, adversarial information retrieval, better known as search and destroy for web spam. While understanding ranking factors is a great idea, it is also good to know the things that might get one dampened.

Web spam generally breaks into two categories;

- Boosting; tactics used to increase a ranking (link spam for example)

- Hiding; tactics used to mislead or trick the search engine (cloaking for example)

Again, this isn't the core focus, so be sure to read my post on web spam for more. Some common spam fighting elements include;

Content Spam

- Language:

- Domain:

- Words per page:

- Keywords in page TITLE:

- Amount of anchor text:

- Fraction of visible content:

- Compressibility:

- Globally popular words:

- Query spam:

- Host-level spam

- Phrase-based
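To give a feel for one item in that list, compressibility is usually read as a check on how repetitive a page's text is: keyword-stuffed copy squeezes down far more than natural writing. The sketch below shows that reading using `zlib` from the standard library; the function name and the sample strings are made up, and no threshold is implied, since where a real system would draw the line is not something I can tell you.

```python
import zlib


def compression_ratio(text: str) -> float:
    """Return uncompressed size divided by zlib-compressed size.

    Higher values mean more repetitive text. Returns 0.0 for empty input.
    """
    raw = text.encode("utf-8")
    if not raw:
        return 0.0
    return len(raw) / len(zlib.compress(raw))


if __name__ == "__main__":
    natural = (
        "Our workshop has been repairing vintage bicycles since the nineties. "
        "Bring in a frame, a wheel or just a question and we will take a look."
    )
    stuffed = "cheap widgets buy cheap widgets best cheap widgets " * 200
    print("natural:", round(compression_ratio(natural), 2))
    print("stuffed:", round(compression_ratio(stuffed), 2))
```

Run it against a few of your own pages and compare them to each other rather than to any fixed number.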

Link Spam

- TrustRank:

- Link stuffing:

- Nepotistic links:

- Topological spamming (link farms):

- Temporal anomalies:

We're all now fairly familiar with Panda (type) and Penguin devaluations as well as manual actions such as the Unnatural Links messages. But one should also be cognisant of the myriad of other ways Google might be looking at your site, in terms of how spammy it is.

SEO is dead

Right? Ok, maybe not.

Your mission, my friends, should you choose to accept it, is to develop an SEO strategy that covers all the elements within this post. Because that, my friends, is what the savvy search optimizer should be doing.

I could sit here and explain to you ways to leverage them all, but this is an article not a book.

My goal here today was to bring light to the complexity of our reality. Whether you're a website owner, SEO enthusiast or hard-core optimizing guru, never become myopic about how Google really works. See the forest, see the trees and even the leaves.

As you were.....

Images by David Harry

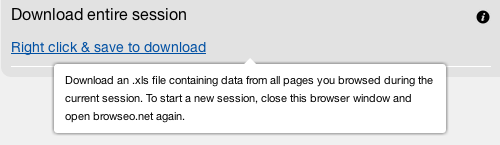

"A one-click tool that shows basic but important SEO metrics in an easy-to-read format." "Browseo fills a crucial need; seeing the web like an engine. Thanks to Jonathan and the team for making it easy." — Rand Fishkin

"It's smart, simple and effective... nice combo!"